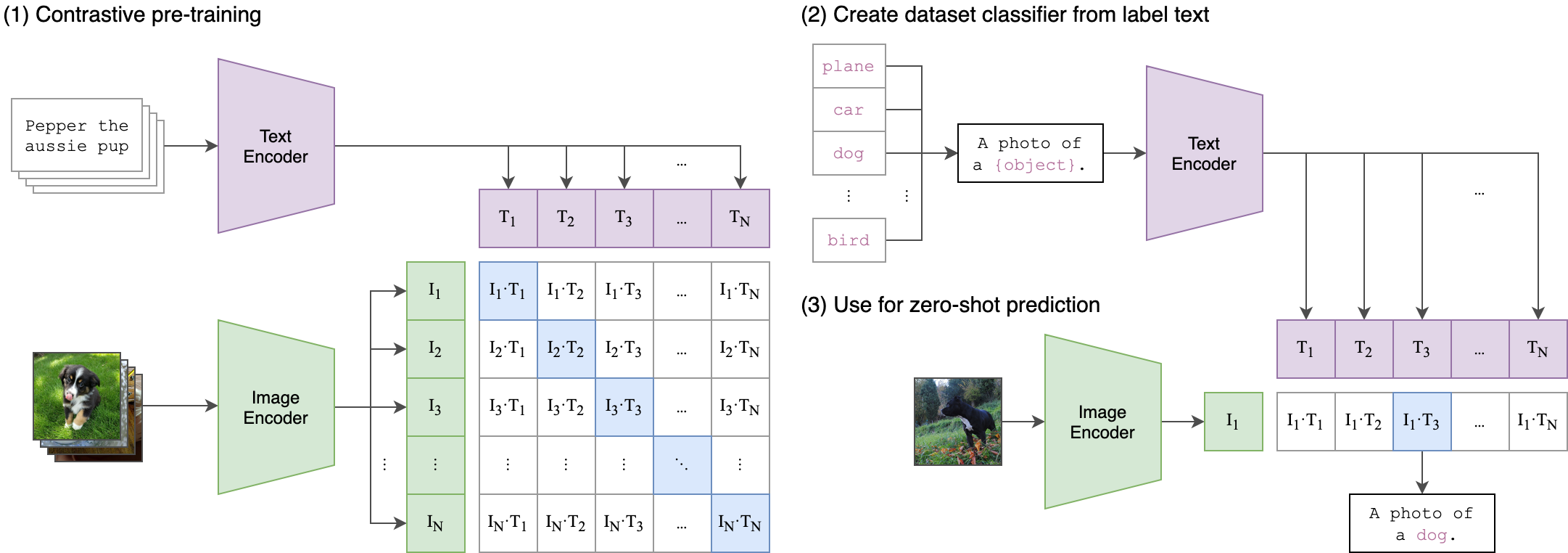

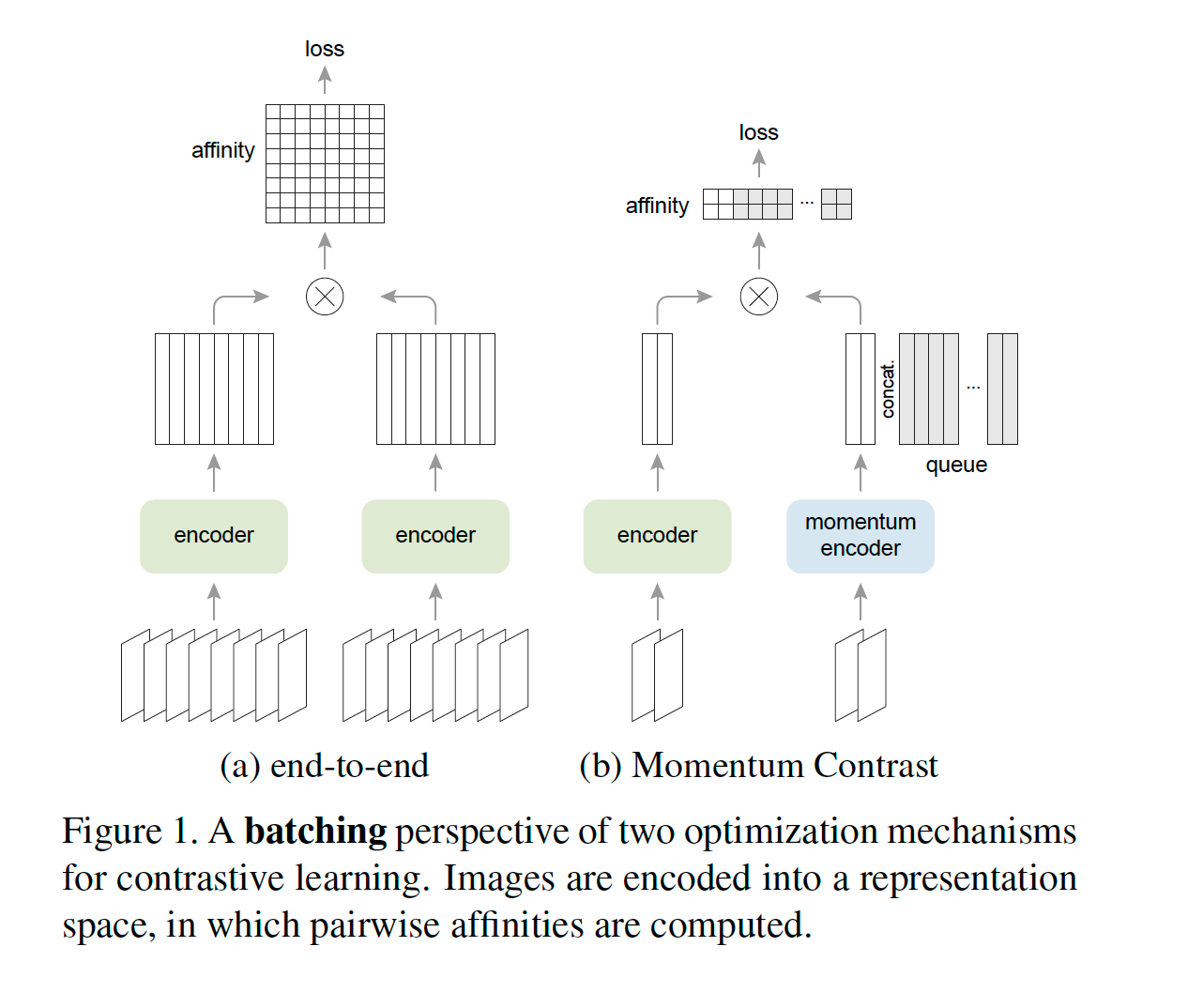

This module combines CLIP with MoCo to increase the number of negative samples. This is useful when the available compute cannot support large batch sizes, for example when there are no GPUs with large memory, or no multi-GPU machines on which to run a distributed InfoNCE loss implementation.
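The two MoCo ingredients mentioned above, a fixed-size queue of past key embeddings and a momentum-updated key encoder, can be sketched in plain Python. This is a minimal illustration under assumptions, not the module's implementation: `momentum_update` and `enqueue` are hypothetical helper names, parameters are plain floats instead of tensors, and only `K=4096` and `m=0.999` are taken from the `CLIPMOCO` defaults.

```python
from collections import deque

K = 4096   # queue capacity, same value as the CLIPMOCO default
m = 0.999  # momentum coefficient, same value as the CLIPMOCO default


def momentum_update(query_params, key_params, m=m):
    """EMA update of the key encoder: key <- m * key + (1 - m) * query."""
    return [m * k + (1.0 - m) * q for q, k in zip(query_params, key_params)]


# FIFO queue: once it holds K items, appending a new key drops the oldest one.
queue = deque(maxlen=K)


def enqueue(queue, batch_keys):
    """Store this batch's key embeddings so later batches can use them as negatives."""
    queue.extend(batch_keys)


# Toy run: batches of only 2 "embeddings" still accumulate K negatives over time.
for step in range(3000):
    enqueue(queue, [(step, 0), (step, 1)])

new_key_params = momentum_update([1.0, 2.0], [0.0, 0.0])
print(len(queue))  # 4096
```

With a batch size of 2, each query is still contrasted against up to 4096 stored keys, which is the effect a large batch or a distributed loss would otherwise provide.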

class ClipTokenizer[source]

ClipTokenizer(context_length=77) ::DisplayedTransform

Tokenizer from https://github.com/openai/CLIP/blob/main/clip/simple_tokenizer.py
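To illustrate what a fixed `context_length` implies, here is a small sketch of padding a token sequence to 77 positions. It follows the convention of OpenAI's reference `tokenize` function (start token, tokens, end token, zero padding); whether `ClipTokenizer` does exactly this is an assumption. `pad_to_context` is a hypothetical helper, and the token ids in the usage line are made up for illustration.

```python
from typing import List, Sequence


def pad_to_context(token_ids: Sequence[int], context_length: int = 77,
                   sot_id: int = 49406, eot_id: int = 49407) -> List[int]:
    """Wrap BPE ids in start/end tokens and zero-pad to a fixed length.

    sot_id / eot_id are the special-token ids of the CLIP BPE vocabulary
    (assumed here); 0 is used as the padding value.
    """
    ids = [sot_id, *token_ids, eot_id]
    if len(ids) > context_length:
        raise ValueError(f"input needs {len(ids)} positions, limit is {context_length}")
    return ids + [0] * (context_length - len(ids))


row = pad_to_context([320, 1125])  # hypothetical ids for a short caption
print(len(row))  # 77
```

Every caption therefore becomes a row of the same length, which is why the model summary further down reports an input shape of `[2, 77]` for a batch of two texts.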

vitb32_config[source]

vitb32_config(input_res,context_length,vocab_size)

ViT-B/32 configuration, uses 32x32 patches

vitl14_config[source]

vitl14_config(input_res,context_length,vocab_size)

ViT-L/14 configuration, uses 14x14 patches
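The patch size determines how many tokens the vision transformer sees per image. A short arithmetic sketch (`patch_grid` is a hypothetical helper, not part of this module):

```python
from typing import Tuple


def patch_grid(input_res: int, patch_size: int) -> Tuple[int, int]:
    """Return (patches per side, total patches) for a square image."""
    if input_res % patch_size != 0:
        raise ValueError("input resolution must be divisible by the patch size")
    side = input_res // patch_size
    return side, side * side


print(patch_grid(224, 32))  # (7, 49)   ViT-B/32 at 224x224
print(patch_grid(224, 14))  # (16, 256) ViT-L/14 at 224x224
```

The 7 x 7 grid for ViT-B/32 matches the `2 x 768 x 7 x 7` output shape of the first `Conv2d` in the model summary further down.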

class Bottleneck[source]

Bottleneck(inplanes,planes,stride=1) ::Module

Base class for all neural network modules.

Your models should also subclass this class.

Modules can also contain other Modules, allowing to nest them in a tree structure. You can assign the submodules as regular attributes:

    import torch.nn as nn
    import torch.nn.functional as F

    class Model(nn.Module):
        def __init__(self):
            super(Model, self).__init__()
            self.conv1 = nn.Conv2d(1, 20, 5)
            self.conv2 = nn.Conv2d(20, 20, 5)

        def forward(self, x):
            x = F.relu(self.conv1(x))
            return F.relu(self.conv2(x))

Submodules assigned in this way will be registered, and will have their parameters converted too when you call `to`, etc.

Variables: `training` (bool): Boolean represents whether this module is in training or evaluation mode.

class AttentionPool2d[source]

AttentionPool2d(spacial_dim:int,embed_dim:int,num_heads:int,output_dim:int=None) ::Module

class ModifiedResNet[source]

ModifiedResNet(layers,output_dim,heads,input_resolution=224,width=64) ::Module

A ResNet class that is similar to torchvision's but contains the following changes:

- There are now 3 "stem" convolutions as opposed to 1, with an average pool instead of a max pool.

- Performs anti-aliasing strided convolutions, where an avgpool is prepended to convolutions with stride > 1

- The final pooling layer is a QKV attention instead of an average pool

class LayerNorm[source]

LayerNorm(normalized_shape:Union[int, List[int], Size],eps:float=1e-05,elementwise_affine:bool=True,device=None,dtype=None) ::LayerNorm

Subclass torch's LayerNorm to handle fp16.

class QuickGELU[source]

QuickGELU() ::Module
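In OpenAI's CLIP reference code, `QuickGELU` computes `x * sigmoid(1.702 * x)`, a cheap approximation of GELU. Assuming this module follows the same definition, the following scalar sketch compares it with the exact GELU (`quick_gelu` and `gelu` are illustrative helper names working on Python floats rather than tensors):

```python
import math


def quick_gelu(x: float) -> float:
    """x * sigmoid(1.702 * x), written as x / (1 + exp(-1.702 * x))."""
    return x / (1.0 + math.exp(-1.702 * x))


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


for x in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(f"x={x:+.1f}  quick_gelu={quick_gelu(x):+.4f}  gelu={gelu(x):+.4f}")
```

On this range the two functions differ by roughly 0.02 at most, which is the trade-off the approximation makes for a simpler elementwise computation.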

class ResidualAttentionBlock[source]

ResidualAttentionBlock(d_model:int,n_head:int,attn_mask:Tensor=None) ::Module

class Transformer[source]

Transformer(width:int,layers:int,heads:int,attn_mask:Tensor=None,checkpoint=False,checkpoint_nchunks=2) ::Module

class VisualTransformer[source]

VisualTransformer(input_resolution:int,patch_size:int,width:int,layers:int,heads:int,output_dim:int, **kwargs) ::Module

class CLIPMOCO[source]

CLIPMOCO(embed_dim:int,image_resolution:int,vision_layers:Union[Tuple[int, int, int, int], int],vision_width:int,vision_patch_size:int,context_length:int,vocab_size:int,transformer_width:int,transformer_heads:int,transformer_layers:int,K=4096,m=0.999, **kwargs) ::Module

| | Type | Default | Details |
|---|---|---|---|
| embed_dim | int | | |
| image_resolution | int | | vision |
| vision_layers | Union[Tuple[int, int, int, int], int] | | |
| vision_width | int | | |
| vision_patch_size | int | | |
| context_length | int | | text |
| vocab_size | int | | |
| transformer_width | int | | |
| transformer_heads | int | | |
| transformer_layers | int | | |
| K | int | 4096 | |
| m | float | 0.999 | |
| kwargs | | | |

A useful proxy metric for tracking training performance and convergence.

class RetrievalAtK[source]

RetrievalAtK(k=20, **kwargs) ::AccumMetric

Stores predictions and targets on CPU in accumulate to perform final calculations with func.
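As a rough sketch of what a retrieval-at-K metric measures, assume a square similarity matrix in which entry `(i, j)` scores image `i` against text `j` and the true match for row `i` is column `i`. The helpers below are illustrative and are not the `RetrievalAtK` implementation; the `"mean"` and `"median"` options are assumed to refer to the rank of the true match, mirroring the `k="mean"` and `k="median"` arguments used in the example further down.

```python
from statistics import mean, median


def match_ranks(sim):
    """Rank of the true match in each row (1 = best)."""
    ranks = []
    for i, row in enumerate(sim):
        # rank = 1 + number of candidates that score strictly higher than the true match
        ranks.append(1 + sum(1 for s in row if s > row[i]))
    return ranks


def retrieval_at_k(sim, k):
    """Fraction of rows whose true match is in the top k, or the mean/median rank."""
    ranks = match_ranks(sim)
    if k == "mean":
        return mean(ranks)
    if k == "median":
        return median(ranks)
    return sum(r <= k for r in ranks) / len(ranks)


sim = [
    [0.9, 0.1, 0.2],  # true match ranked 1st
    [0.8, 0.3, 0.1],  # true match ranked 2nd
    [0.2, 0.1, 0.7],  # true match ranked 1st
]
print(match_ranks(sim))        # [1, 2, 1]
print(retrieval_at_k(sim, 2))  # 1.0
```

A rising retrieval-at-K (or a falling mean rank) on the validation set indicates that matching image and text pairs are moving closer together in the embedding space.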

class CLIPMOCOTrainer[source]

CLIPMOCOTrainer(after_create=None,before_fit=None,before_epoch=None,before_train=None,before_batch=None,after_pred=None,after_loss=None,before_backward=None,before_step=None,after_cancel_step=None,after_step=None,after_cancel_batch=None,after_batch=None,after_cancel_train=None,after_train=None,before_validate=None,after_cancel_validate=None,after_validate=None,after_cancel_epoch=None,after_epoch=None,after_cancel_fit=None,after_fit=None) ::Callback

MoCo Loss for CLIP. Can be used with or without DistributedDataParallel
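A MoCo-style contrastive loss can be sketched as an InfoNCE term in which the positive key competes against the negatives stored in the queue. The version below is a scalar illustration under assumptions: `info_nce_with_queue` is a hypothetical helper, embeddings are plain lists assumed to be L2-normalized, and the temperature of 0.07 is a placeholder rather than a value taken from this module. A CLIP-style trainer would presumably apply such a term in both directions (image to text and text to image).

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def info_nce_with_queue(query, positive_key, queue, temperature=0.07):
    """-log softmax of the positive logit over [positive] + queued negatives."""
    logits = [dot(query, positive_key)] + [dot(query, neg) for neg in queue]
    logits = [l / temperature for l in logits]
    # log-sum-exp with max subtraction for numerical stability
    mx = max(logits)
    log_denom = mx + math.log(sum(math.exp(l - mx) for l in logits))
    return log_denom - logits[0]


query = [1.0, 0.0]
loss_aligned = info_nce_with_queue(query, [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])
loss_misaligned = info_nce_with_queue(query, [0.0, 1.0], [[1.0, 0.0]])
print(loss_aligned < loss_misaligned)  # True
```

The loss is close to zero when the query matches its positive key better than every queued negative, and grows when a negative in the queue is more similar to the query than the positive.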

    from fastai.vision.all import *
    # ClipTokenizer, vitb32_config, CLIPMOCO, CLIPMOCOTrainer and RetrievalAtK are defined in this module

    # Map the MNIST_TINY folder labels to short text captions
    num2txt = {'3': 'three', '7': 'seven'}
    def num_to_txt(o): return num2txt[o]
    def dummy_targ(o): return 0 # loss func is not called without it

    path = untar_data(URLs.MNIST_TINY)
    items = get_image_files(path)
    clip_tokenizer = ClipTokenizer()

    # Two inputs (image, text) plus a dummy target
    tds = Datasets(items, [PILImage.create, [parent_label, num_to_txt], dummy_targ], n_inp=2, splits=GrandparentSplitter()(items))
    dls = tds.dataloaders(bs=2, after_item=[Resize(224), clip_tokenizer, ToTensor()], after_batch=[IntToFloatTensor()], device='cpu')

    vitb32_config_dict = vitb32_config(224, clip_tokenizer.context_length, clip_tokenizer.vocab_size)
    clip_model = CLIPMOCO(K=4096, m=0.999, **vitb32_config_dict, checkpoint=False, checkpoint_nchunks=0)

    # The loss is computed by CLIPMOCOTrainer, so loss_func is a no-op
    learner = Learner(dls, clip_model, loss_func=noop, cbs=[CLIPMOCOTrainer(), ShortEpochCallback(0.001)],
                      metrics=[RetrievalAtK(k=5),
                               RetrievalAtK(k=20),
                               RetrievalAtK(k="mean"),
                               RetrievalAtK(k="median")])

    learner.summary()

CLIPMOCO (Input shape: 2 x torch.Size([2, 77]))

============================================================================

Layer (type) Output Shape Param # Trainable

============================================================================

2 x 768 x 7 x 7

Conv2d 2359296 True

LayerNorm 1536 True

LayerNorm 1536 True

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 True

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 True

LayerNorm 1536 True

LayerNorm 1536 True

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 True

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 True

LayerNorm 1536 True

LayerNorm 1536 True

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 True

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 True

LayerNorm 1536 True

LayerNorm 1536 True

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 True

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 True

LayerNorm 1536 True

LayerNorm 1536 True

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 True

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 True

LayerNorm 1536 True

LayerNorm 1536 True

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 True

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 True

LayerNorm 1536 True

LayerNorm 1536 True

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 True

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 True

LayerNorm 1536 True

LayerNorm 1536 True

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 True

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 True

LayerNorm 1536 True

LayerNorm 1536 True

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 True

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 True

LayerNorm 1536 True

LayerNorm 1536 True

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 True

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 True

LayerNorm 1536 True

LayerNorm 1536 True

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 True

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 True

LayerNorm 1536 True

LayerNorm 1536 True

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 True

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 True

LayerNorm 1536 True

LayerNorm 1536 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 True

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 True

LayerNorm 1024 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 True

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 True

LayerNorm 1024 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 True

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 True

LayerNorm 1024 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 True

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 True

LayerNorm 1024 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 True

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 True

LayerNorm 1024 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 True

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 True

LayerNorm 1024 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 True

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 True

LayerNorm 1024 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 True

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 True

LayerNorm 1024 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 True

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 True

LayerNorm 1024 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 True

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 True

LayerNorm 1024 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 True

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 True

LayerNorm 1024 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 True

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 77 x 512

Embedding 25296896 True

LayerNorm 1024 True

____________________________________________________________________________

2 x 768 x 7 x 7

Conv2d 2359296 False

LayerNorm 1536 False

LayerNorm 1536 False

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 False

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 False

LayerNorm 1536 False

LayerNorm 1536 False

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 False

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 False

LayerNorm 1536 False

LayerNorm 1536 False

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 False

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 False

LayerNorm 1536 False

LayerNorm 1536 False

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 False

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 False

LayerNorm 1536 False

LayerNorm 1536 False

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 False

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 False

LayerNorm 1536 False

LayerNorm 1536 False

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 False

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 False

LayerNorm 1536 False

LayerNorm 1536 False

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 False

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 False

LayerNorm 1536 False

LayerNorm 1536 False

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 False

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 False

LayerNorm 1536 False

LayerNorm 1536 False

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 False

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 False

LayerNorm 1536 False

LayerNorm 1536 False

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 False

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 False

LayerNorm 1536 False

LayerNorm 1536 False

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 False

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 False

LayerNorm 1536 False

LayerNorm 1536 False

____________________________________________________________________________

2 x 1 x 3072

Linear 2362368 False

QuickGELU

____________________________________________________________________________

2 x 1 x 768

Linear 2360064 False

LayerNorm 1536 False

LayerNorm 1536 False

LayerNorm 1024 False

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 False

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 False

LayerNorm 1024 False

LayerNorm 1024 False

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 False

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 False

LayerNorm 1024 False

LayerNorm 1024 False

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 False

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 False

LayerNorm 1024 False

LayerNorm 1024 False

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 False

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 False

LayerNorm 1024 False

LayerNorm 1024 False

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 False

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 False

LayerNorm 1024 False

LayerNorm 1024 False

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 False

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 False

LayerNorm 1024 False

LayerNorm 1024 False

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 False

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 False

LayerNorm 1024 False

LayerNorm 1024 False

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 False

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 False

LayerNorm 1024 False

LayerNorm 1024 False

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 False

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 False

LayerNorm 1024 False

LayerNorm 1024 False

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 False

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 False

LayerNorm 1024 False

LayerNorm 1024 False

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 False

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 False

LayerNorm 1024 False

LayerNorm 1024 False

____________________________________________________________________________

2 x 1 x 2048

Linear 1050624 False

QuickGELU

____________________________________________________________________________

2 x 1 x 512

Linear 1049088 False

LayerNorm 1024 False

____________________________________________________________________________

Total params: 193,876,992

Total trainable params: 109,587,456

Total non-trainable params: 84,289,536

Optimizer used: <function Adam at 0x7fbd8d0189e0>

Loss function: <bound method CLIPMOCOTrainer.lf of CLIPMOCOTrainer>

Callbacks:

- TrainEvalCallback

- ShortEpochCallback

- CLIPMOCOTrainer

- Recorder

- ProgressCallback
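The parameter counts in the summary can be checked by hand, which also helps when reading the flattened table. The sketch below recomputes a few rows with hypothetical helper functions. It assumes the patch-embedding `Conv2d` has no bias and infers a vocabulary size of 49408 from the `Embedding` row (25296896 / 512); neither is stated explicitly in the text. The rows marked `False` are presumably the frozen momentum (key) encoders.

```python
def linear_params(n_in: int, n_out: int) -> int:
    return n_in * n_out + n_out            # weight + bias


def layernorm_params(width: int) -> int:
    return 2 * width                       # scale + shift


def conv_patch_params(in_ch: int, width: int, patch: int) -> int:
    return in_ch * patch * patch * width   # assumes no bias term


def embedding_params(vocab: int, width: int) -> int:
    return vocab * width


print(conv_patch_params(3, 768, 32))  # 2359296  (Conv2d row)
print(linear_params(768, 3072))       # 2362368  (vision MLP, first Linear)
print(linear_params(3072, 768))       # 2360064  (vision MLP, second Linear)
print(layernorm_params(768))          # 1536     (vision LayerNorm)
print(linear_params(512, 2048))       # 1050624  (text MLP, first Linear)
print(linear_params(2048, 512))       # 1049088  (text MLP, second Linear)
print(embedding_params(49408, 512))   # 25296896 (Embedding row)

# Trainable and non-trainable parameters add up to the reported total.
total = 109_587_456 + 84_289_536
print(total)                          # 193876992
```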