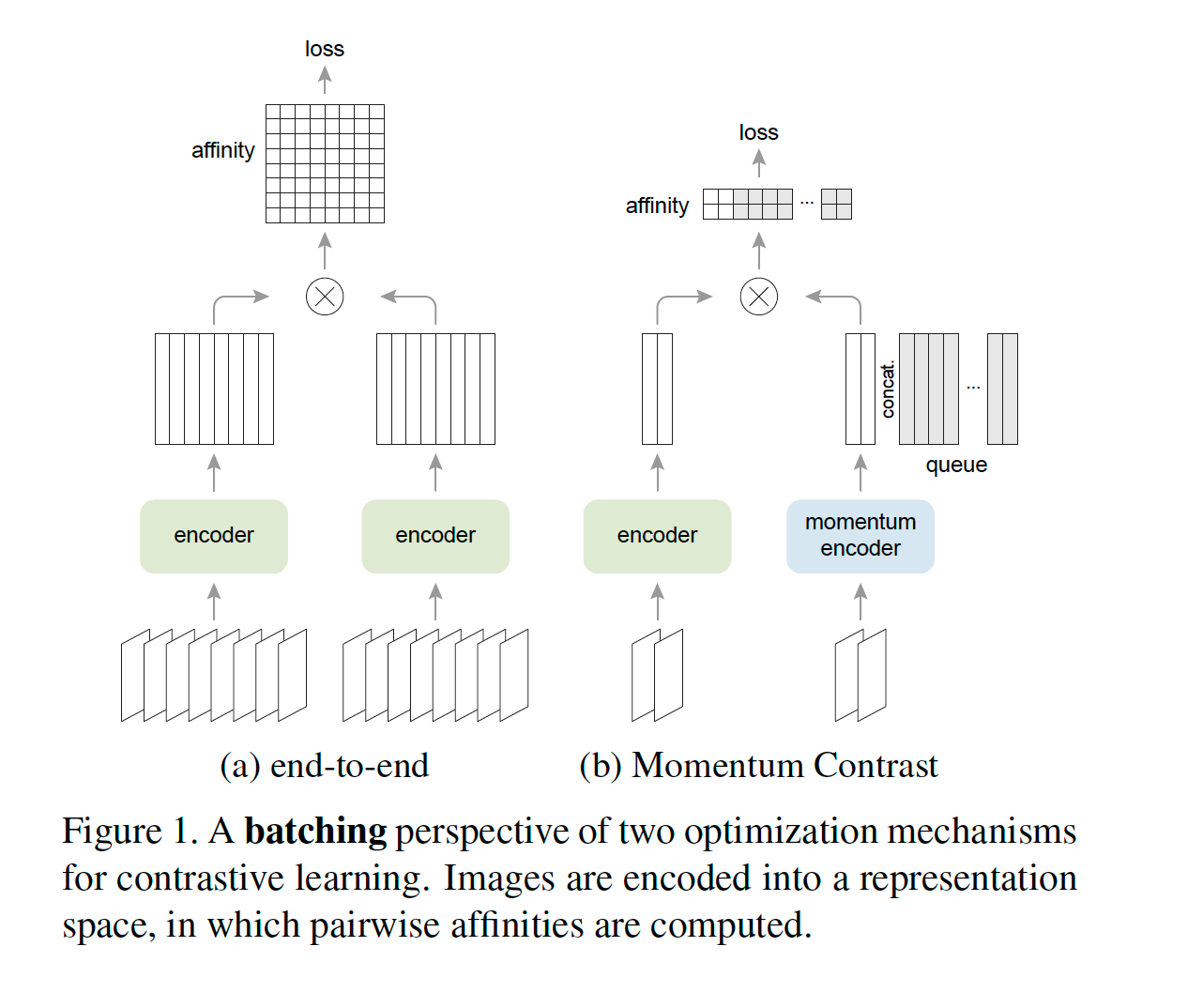

Absract (MoCo V2): Contrastive unsupervised learning has recently shown encouraging progress, e.g., in Momentum Contrast (MoCo) and SimCLR. In this note, we verify the effectiveness of two of SimCLR’s design improvements by implementing them in the MoCo framework. With simple modifications to MoCo— namely, using an MLP projection head and more data augmentation—we establish stronger baselines that outperform SimCLR and do not require large training batches. We hope this will make state-of-the-art unsupervised learning research more accessible. Code will be made public.

You can either use MoCoModel module to create a model by passing predefined encoder and projector models or you can use create_moco_model with just passing predefined encoder and expected input channels. In new MoCo paper, model consists of an encoder and a mlp projector following the SimCLR-v2 improvements.

You may refer to: official implementation

encoder = create_encoder("tf_efficientnet_b0_ns", n_in=3, pretrained=False, pool_type=PoolingType.CatAvgMax)

model = create_moco_model(encoder, hidden_size=2048, projection_size=128)

out = model(torch.randn((2,3,224,224))); out.shape

The following parameters can be passed;

- aug_pipelines list of augmentation pipelines List[Pipeline] created using functions from

self_supervised.augmentationsmodule. EachPipelineshould be set tosplit_idx=0. You can simply useget_moco_aug_pipelinesutility to get aug_pipelines. - K is queue size. For simplicity K needs to be a multiple of batch size and it needs to be less than total training data. You can try out different values e.g.

bs*2^kby varying k where bs i batch size. - m is momentum for key encoder update.

0.999is a good default according to the paper. - temp temperature scaling for cross entropy loss similar to

SimCLR

You may refer to official implementation

Our implementation doesn't uses shuffle BN and instead it uses current batch for both positives and negatives during loss calculation. This should handle the "signature" issue coming from batchnorm which is argued to be allowing model to cheat for same batch positives. This modification not only creates simplicity but also allows training with a single GPU. Official Shuffle BN implementation depends on DDP (DistributedDataParallel) and only supports multiple GPU environments. Unfortunately, not everyone has access to multiple GPUs and we hope with this modification MoCo will be more accessible now.

For more details about our proposed custom implementation you may refer to this Github issue.

MoCo algorithm uses 2 views of a given image, and MOCO callback expects a list of 2 augmentation pipelines in aug_pipelines.

You can simply use helper function get_moco_aug_pipelines() which will allow augmentation related arguments such as size, rotate, jitter...and will return a list of 2 pipelines, which we can be passed to the callback. This function uses get_multi_aug_pipelines which then get_batch_augs. For more information you may refer to self_supervised.augmentations module.

Also, you may choose to pass your own list of aug_pipelines which needs to be List[Pipeline, Pipeline] where Pipeline(..., split_idx=0). Here, split_idx=0 forces augmentations to be applied in training mode.

path = untar_data(URLs.MNIST_TINY)

items = get_image_files(path)

tds = Datasets(items, [PILImageBW.create, [parent_label, Categorize()]], splits=GrandparentSplitter()(items))

dls = tds.dataloaders(bs=8, after_item=[ToTensor(), IntToFloatTensor()], device='cpu')

fastai_encoder = create_encoder('xresnet18', n_in=1, pretrained=False)

model = create_moco_model(fastai_encoder, hidden_size=1024, projection_size=128, bn=True)

aug_pipelines = get_moco_aug_pipelines(size=28, rotate=False, jitter=False, bw=False, blur=False, stats=None, cuda=False)

learn = Learner(dls, model, cbs=[MOCO(aug_pipelines=aug_pipelines, K=128, print_augs=True), ShortEpochCallback(0.001)])

learn.summary()

b = dls.one_batch()

learn._split(b)

learn.pred = learn.model(*learn.xb)

axes = learn.moco.show(n=5)

learn.fit(1)

learn.recorder.losses