At the time this module was written, the official CLIP repository was structured mainly for inference. This module adds the changes needed for training, incorporating the tricks described in the paper and the discussions in the repository's GitHub issues.

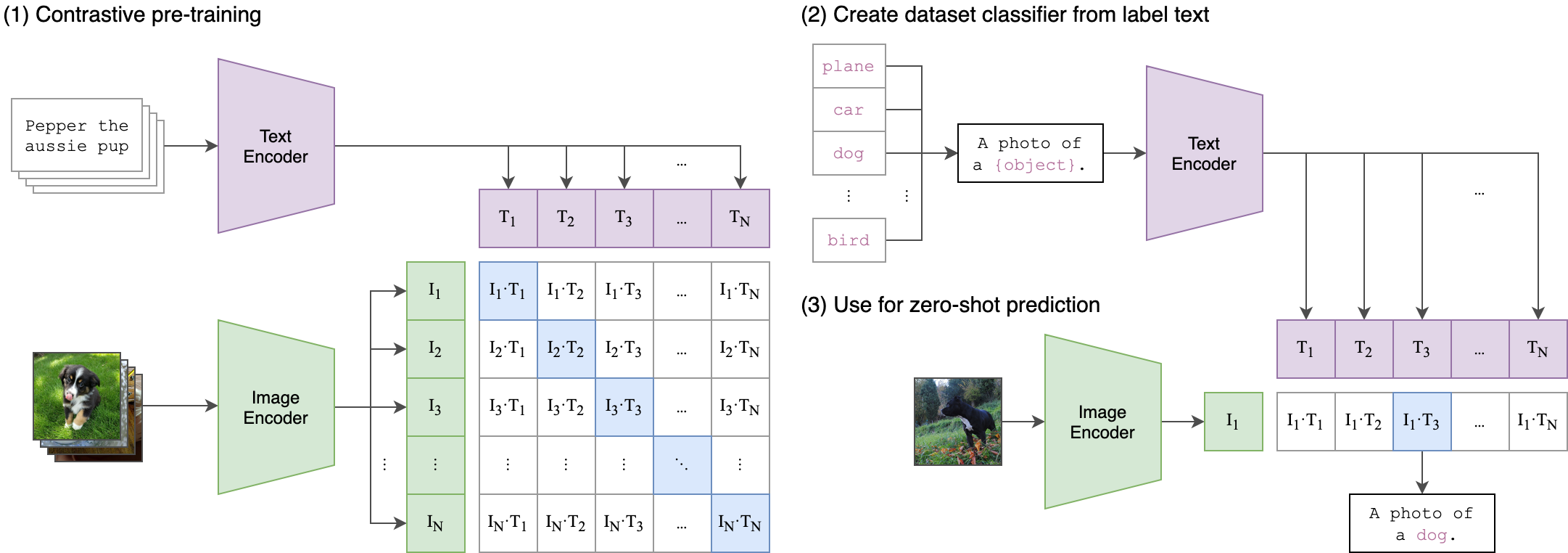

Abstract: State-of-the-art computer vision systems are trained to predict a fixed set of predetermined object categories. This restricted form of supervision limits their generality and usability since additional labeled data is needed to specify any other visual concept. Learning directly from raw text about images is a promising alternative which leverages a much broader source of supervision. We demonstrate that the simple pre-training task of predicting which caption goes with which image is an efficient and scalable way to learn state-of-the-art image representations from scratch on a dataset of 400 million (image, text) pairs collected from the internet. After pre-training, natural language is used to reference learned visual concepts (or describe new ones) enabling zero-shot transfer of the model to downstream tasks. We study the performance of this approach by benchmarking on over 30 different existing computer vision datasets, spanning tasks such as OCR, action recognition in videos, geo-localization, and many types of fine-grained object classification. The model transfers non-trivially to most tasks and is often competitive with a fully supervised baseline without the need for any dataset specific training. For instance, we match the accuracy of the original ResNet-50 on ImageNet zero-shot without needing to use any of the 1.28 million training examples it was trained on. We release our code and pre-trained model weights at https://github.com/OpenAI/CLIP.
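The pre-training task in the abstract, predicting which caption goes with which image, is commonly written as a symmetric contrastive loss over the N x N image-text similarity matrix of a batch. The following is a minimal NumPy sketch of that objective, assuming L2-normalised embeddings and the learnable temperature described in the paper (initialised to the equivalent of 0.07 and clipped so logits are never scaled by more than 100). clip_loss and log_softmax are hypothetical helpers written for illustration; this is not the module's own loss code.

```python
import numpy as np

def log_softmax(x, axis):
    # Numerically stable log-softmax along the given axis
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def clip_loss(image_emb, text_emb, log_scale):
    """Hypothetical symmetric contrastive loss for N matching (image, text) pairs.

    image_emb, text_emb: arrays of shape (N, D); row i of each forms a matching pair.
    log_scale: learnable log inverse temperature (log(1/0.07) at initialisation).
    """
    # L2-normalise so the dot product is a cosine similarity
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    # Clamp the scale so logits are never multiplied by more than 100
    scale = min(np.exp(log_scale), 100.0)
    logits = scale * image_emb @ text_emb.T  # (N, N); the diagonal holds the true pairs
    idx = np.arange(len(logits))
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # each image vs. all captions
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # each caption vs. all images
    return (loss_i2t + loss_t2i) / 2
```

Averaging the two cross-entropy terms makes the objective symmetric: every image is classified against all captions in the batch, and every caption against all images.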

RetrievalAtK is a useful proxy metric for tracking training performance and convergence.
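Retrieval@k asks, for each image, whether its own caption is among the k most similar texts; the "mean" and "median" variants report the average and median rank of the correct caption instead. The sketch below is a hypothetical NumPy illustration assuming normalised embeddings, 1-based ranks, and candidates drawn from the same batch. It is not necessarily how RetrievalAtK computes the metric, for example in which candidates it ranks against or how it breaks ties.

```python
import numpy as np

def retrieval_ranks(image_emb, text_emb):
    """1-based rank of the matching caption for each image (row i matches row i)."""
    sims = image_emb @ text_emb.T           # (N, N) similarity matrix
    correct = np.diag(sims)[:, None]        # similarity of each true (image, caption) pair
    # Rank = 1 + number of captions scoring strictly higher (ties count in the image's favour)
    return 1 + (sims > correct).sum(axis=1)

def retrieval_at_k(image_emb, text_emb, k):
    """Hypothetical retrieval metric: recall@k for an int k, or 'mean' / 'median' rank."""
    ranks = retrieval_ranks(image_emb, text_emb)
    if k == "mean":
        return ranks.mean()
    if k == "median":
        return np.median(ranks)
    return (ranks <= k).mean()              # fraction of images whose caption is in the top k
```

As the model converges, recall@k should rise towards 1 and the mean and median ranks should fall towards 1, which is why these numbers work as a cheap training proxy.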

Training Tip: In my own experiments, CLIPTrainer converged faster than DistributedCLIPTrainer. For the best speed and performance, combine CLIPTrainer with DistributedDataParallel, fp16 training and a ZeRO optimizer, using the largest batch size that fits in memory.

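from fastai.vision.all import *
from self_supervised.multimodal.clip import *  # assumed location of CLIP, ClipTokenizer, vitb32_config, CLIPTrainer, RetrievalAtK

# Turn MNIST_TINY digit labels into text captions
num2txt = {'3': 'three', '7': 'seven'}
def num_to_txt(o): return num2txt[o]

# fastai skips the loss step when a batch has no target, so return a constant dummy one
def dummy_targ(o): return 0

path = untar_data(URLs.MNIST_TINY)
items = get_image_files(path)
clip_tokenizer = ClipTokenizer()

# Each sample is (image, caption, dummy target); n_inp=2 marks image and caption as model inputs
tds = Datasets(items, [PILImage.create, [parent_label, num_to_txt], dummy_targ], n_inp=2,
               splits=GrandparentSplitter()(items))
dls = tds.dataloaders(bs=2, after_item=[Resize(224), clip_tokenizer, ToTensor()],
                      after_batch=[IntToFloatTensor()], device='cpu')

# ViT-B/32 config for 224px images, sized to the tokenizer's context length and vocabulary
vitb32_config_dict = vitb32_config(224, clip_tokenizer.context_length, clip_tokenizer.vocab_size)
clip_model = CLIP(**vitb32_config_dict, checkpoint=False, checkpoint_nchunks=0)

# The contrastive loss is handled by CLIPTrainer, so loss_func is only a placeholder (noop);
# ShortEpochCallback(0.001) stops after 0.1% of an epoch for a quick smoke test
learner = Learner(dls, clip_model, loss_func=noop, cbs=[CLIPTrainer(), ShortEpochCallback(0.001)],
                  metrics=[RetrievalAtK(k=5), RetrievalAtK(k=20),
                           RetrievalAtK(k="mean"), RetrievalAtK(k="median")])
learner.fit(1)
learner.recorder.losses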
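To make the Datasets call concrete, here is a plain-Python sketch of what a single MNIST_TINY file path turns into. It assumes fastai's parent_label returns the name of the file's parent folder and that GrandparentSplitter decides the split from the grandparent folder name (train or valid). make_sample and the example path are hypothetical: they only mirror the labelling and splitting logic, without loading images or tokenising captions.

```python
from pathlib import Path

num2txt = {'3': 'three', '7': 'seven'}

def make_sample(path):
    """Hypothetical stand-in for one item of the Datasets pipeline (no image loading)."""
    path = Path(path)
    caption = num2txt[path.parent.name]  # parent_label, then num_to_txt
    split = path.parent.parent.name      # folder name that GrandparentSplitter checks
    target = 0                           # dummy_targ: constant placeholder target
    # With n_inp=2, the first two elements (image, caption) are passed to the model
    return (path, caption, target), split

sample, split = make_sample("mnist_tiny/train/7/example.png")
print(sample[1], split)  # prints: seven train
```

Because the third element is a constant, it carries no information; it only exists so that a target is present in each batch.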